![[Logo Padico]](img/padico-small.jpeg)

PadicoTM is the runtime infrastructure for the Padico software environment for computational grids.

PadicoTM is composed of a core, which provides a high-performance framework for networking and multi-threading, and of services plugged into the core. High-performance communications and threads are obtained through Marcel and Madeleine, both provided by the PM2 software suite. The PadicoTM core aims to make the services that run at the same time cooperate rather than compete.

![[big picture PadicoTM]](img/padicotm-bigpicture-320.png)

PadicoTM exposes standard interfaces (VIO: virtual sockets; Circuit: Madeleine-like API; etc.) usable by various middleware systems. Because PadicoTM intercepts symbols, middleware runs unmodified and uses the PadicoTM communication methods seamlessly. The middleware systems available over PadicoTM are:

These middleware systems use the PadicoTM core, and therefore they: benefit from high-performance networks (Myrinet, Infiniband, SCI) where they are available; use the high-performance Marcel multi-threading system; share their access to the network without lowering performance; are usable at the same time, in the same process; are dynamically loadable and unloadable.

Every piece of code in PadicoTM is embedded in a module, which consists of a binary object (one or more ".so" files) and a description file written in XML. Modules may be loaded, run, and unloaded dynamically, on one node, on all nodes, or on a group of nodes.

The core itself is composed of three modules. Puk (a nickname for Padico micro-kernel) is the foundation module; its task is to manage modules (loading, running, and unloading). ThreadManager manages multi-threading in a coherent way: it provides hooks for periodic operations and manages queues of I/O operations so that they do not block the whole process. NetAccess multiplexes network accesses so that several modules can use networks that would otherwise require exclusive access. Hence different middleware systems (CORBA, MPI, ...) can efficiently share the same process and the same network without disturbing each other.
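The multiplexing role described for NetAccess can be illustrated with a minimal sketch. This is not PadicoTM code: the class `NetAccessSketch`, its methods, and the tags `"corba"` and `"mpi"` are hypothetical names chosen for illustration, and an in-memory queue stands in for a network that allows only one owner.

```python
from collections import deque


class NetAccessSketch:
    """Toy multiplexer (hypothetical, not the PadicoTM API).

    A single object owns the exclusive "network"; modules register a
    callback under a tag and never touch the network directly.
    """

    def __init__(self):
        self._handlers = {}    # tag -> callback of the module owning that tag
        self._wire = deque()   # stands in for the exclusive network device

    def register(self, tag, handler):
        """Attach a module's receive callback to a tag."""
        if tag in self._handlers:
            raise ValueError(f"tag already registered: {tag!r}")
        self._handlers[tag] = handler

    def unregister(self, tag):
        """Detach a module, e.g. when it is unloaded."""
        self._handlers.pop(tag, None)

    def send(self, tag, payload):
        """Queue a message on the shared network, labelled with its tag."""
        self._wire.append((tag, payload))

    def poll(self):
        """Drain the network once and dispatch each message by tag.

        Messages whose tag has no registered handler are dropped.
        Returns the number of messages delivered.
        """
        delivered = 0
        while self._wire:
            tag, payload = self._wire.popleft()
            handler = self._handlers.get(tag)
            if handler is not None:
                handler(payload)
                delivered += 1
        return delivered


# Two middleware-like modules share the same network in one process.
corba_inbox, mpi_inbox = [], []
net = NetAccessSketch()
net.register("corba", corba_inbox.append)
net.register("mpi", mpi_inbox.append)

net.send("mpi", b"halo")
net.send("corba", b"request")
net.send("mpi", b"reduce")
delivered = net.poll()
```

In this sketch, a single polling loop is the only reader of the network, which is what lets several modules coexist without competing for exclusive access. The real NetAccess module also cooperates with ThreadManager for polling, which is not modelled here.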

On top of the PadicoTM core, the abstraction layer of PadicoTM is built from components that are assembled freely and dynamically. Various communication methods are embedded in components, which the user may assemble to obtain the communication stack needed. The available communication methods are:

The assembly process is driven by a configurable selector called NetSelector. Several implementations of NetSelector exist in PadicoTM. The main ones are:

The NetSelector configurator is a GUI designed for configuring the basic NetSelector. It lets users graphically define their component assembly. However, it is more than just an assembly tool for software components. A configuration contains target cases, which define when to use a given assembly; for example, a given assembly can be selected for communication between two given sets of machines.
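The idea of target cases can be sketched as a small rule table. The following is only an illustration under stated assumptions: `TargetCase`, `select_assembly`, the host names, and the component names are all hypothetical, and the actual NetSelector configuration format is not shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetCase:
    """Hypothetical rule: use `assembly` between two sets of machines."""
    hosts_a: frozenset
    hosts_b: frozenset
    assembly: tuple  # ordered component names, top of the stack first

    def matches(self, src, dst):
        # A case applies in both directions between the two sets.
        return (src in self.hosts_a and dst in self.hosts_b) or (
            src in self.hosts_b and dst in self.hosts_a
        )


def select_assembly(cases, src, dst, default):
    """Return the assembly of the first matching case, else the default."""
    for case in cases:
        if case.matches(src, dst):
            return case.assembly
    return default


# Hypothetical topology: two clusters linked by a wide-area network.
cluster_a = frozenset({"a1", "a2"})
cluster_b = frozenset({"b1", "b2"})

cases = [
    TargetCase(cluster_a, cluster_a, ("fast-local",)),
    TargetCase(cluster_a, cluster_b, ("compression", "wide-area")),
]
default = ("plain-tcp",)

inside = select_assembly(cases, "a1", "a2", default)
across = select_assembly(cases, "b2", "a1", default)
other = select_assembly(cases, "b1", "b2", default)
```

Here the first matching case wins, so more specific cases should be listed before more general ones. This ordering rule is a design choice of the sketch, not a documented property of NetSelector.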

Some screenshots of the NetSelector configurator follow. On the left, a screenshot of the topology panel describing the target topology; on the right, an example of an assembly in the process of being constructed.

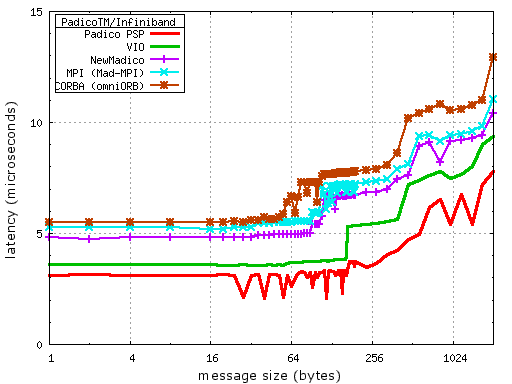

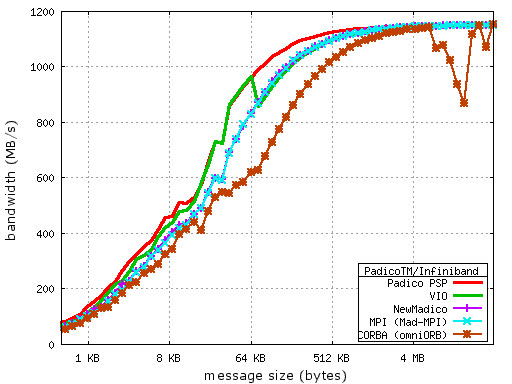

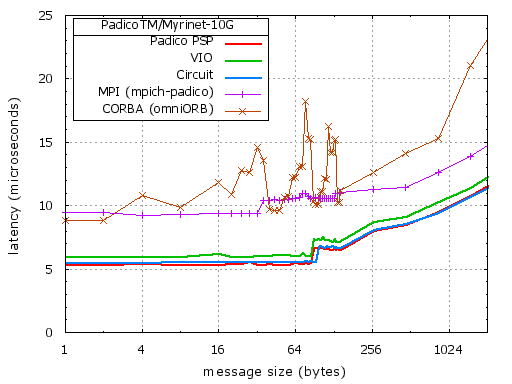

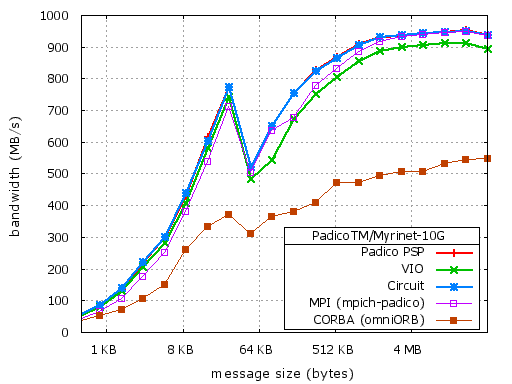

![[Logo Grid 5000]](img/g5k-small.jpeg) We have benchmarked PadicoTM over various networks. The following figures report the latency and bandwidth measured for various PadicoTM APIs over Infiniband, Myrinet-10G, Myrinet-2000, and SCI.

These experiments were performed mostly on the Grid 5000 experimental platform.

alexandre.denis@inria.fr